Open-source

named – keyword arguments for Haskell

A lightweight library for named function parameters (keyword arguments) based on overloaded labels. Keyword arguments have several advantages over positional arguments:

- they can be supplied in arbitrary order

- their names serve as documentation at call site

- it is impossible to accidentally mix them up

Unlike newtype wrappers, keyword arguments don't pollute the global namespace, don't require top-level definitions, and don't need to be exported.

nix-cabal – a script to run cabal inside nix-shell

A small wrapper script for Nix users. Usage: nix-cabal v2-build all.

shower – a prettifier for structured data

A tool for pretty-printing structured text – e.g. JSON, or Haskell

data types. Especially handy for types with broken Show

instances (like UTCTime or UUID).

See a demo at the project page.

Library

These are some of our clients' questions along with our answers. We publish them when we have permission to do so and when the answer has a chance to be useful out of the consulting context.

2019

Is this typeclass for rank-2 functors defined anywhere?

Client

Client

I just sketched out a typeclass sig that roughly matches what I'm looking for... Anybody recognise this?

class ZFunctor (m :: (* -> *) -> *) where

zoist :: (forall a. b a -> c a) -> m b -> m c

It's not Functor, HFunctor, MFunctor or MonadTrans, but it's very close. The usecase is that if you have some data Foo m = Foo { doStuff :: m String } dictionary of functions, if there was a ZFunctor Foo then you could zoist a Foo m into any Foo n if there is an m ~> n.

Even this would be useful:

class IFunctor (m :: (* -> *) -> *) where

ioist :: MonadIO n => m IO -> m n

Indeed it's a functor, but it's not an endofunctor. You won't find a class for every sort of functor out there. There's Control.Categorical.Functor that covers most of them, type IFunctor f = Categorical.Functor f (:~>) (->) as far as I can tell.

Additionally, the first class you're asking about is used in two contexts: the higher-kinded data pattern which seems to be your exact use case, and in the more general context of rank-2 classes (there is also Traversable, Distributive etc): Rank2. The latter library also allows to derive instances via Template Haskell. ZFunctor there is called simply Functor.

2018

Using Aeson with field names like "data"

Client

Client

We have a data type that needs an Aeson encoder / decoder, but one of

the field names in the JSON payload is "data", which is a reserved

keyword. How can we specify a custom field name? (Without writing a

custom manual instance.)

Just name your field data_ and then use custom Aeson options:

instance ToJSON Foo where

toJSON = genericToJSON defaultOptions

{ fieldLabelModifier = unmangle }

instance FromJSON Person where

parseJSON = genericParseJSON defaultOptions

{ fieldLabelModifier = unmangle }

unmangle :: String -> String

unmangle "data_" = "data"

unmangle "type_" = "type"

...

unmangle x = x

Stopping profiling from inside the app

Client

Client

Our app has a big cost during startup that I'd like to

ignore in the .prof output. Is there a way to send a

signal to the process to tell it to forget all its profiling

information to date?

stopProfTimer and startProfTimer in GHC.Profiling

might work. Allocation info will still be collected, but timing

info (ticks) will not.

Network-less mocking with Servant

Client

Client

I have an HTTP service which, from the point of view of my code, I see only as a Servant client.

[...] Now I want to mock out the service, i.e. I want to implement another interpreter for my HTTP effect, which – instead of making a call to a distant service – will return whatever strikes my fancy. Generally I am willing to change large parts of my architecture, as all three solutions I have come up with are horrible.

-

One is to parse the

Requestand do routing myself, which is error-prone and stupid. I should be able to use Servant for this. -

The other one is to spin up a Servant server and make it do the routing. The problem with this is that in my property tests, when I try to spin a new server for every function call, there is some issue with resource acquisition by kernel or

Network.Waiand we run out of ports and everything dies. -

Or I could write a lot of boilerplate code and define another effect which would represent an RPC call. Then basically almost copy and paste this GADT, make it a Servant type, and have two RPC effects interpreters (one doing HTTP calls and another returning my mocked stuff). I dislike this copy and paste bit.

Any hints?

Note 2019-02-03: there is similar functionality implemented in

Network.Wai.Test, but it doesn't integrate with Servant so nicely. Understanding the internals of network-related libraries is also particularly useful, because then you can hack various nice features into them. For some unclear reason it comes up with network-related and web-related libraries more often than with other kinds of libraries.

Network-less requests

You should be able to do requests without ever making any networking.

servant-server gives you a WAI Application, which looks like

this:

type Application = Request

-> (Response -> IO ResponseReceived)

-> IO ResponseReceived

A server (likely warp) feeds our Application requests and provides

a way to send back whatever responses the Application wants. However,

you can create your mock server with serve and then just feed requests

to it without starting it up.

Here's how it can work. servant-client's functions can run in anything

that implements RunClient:

class Monad m => RunClient m where

runRequest :: Request -> m Response

throwServantError :: ServantError -> m a

So, our m has to have access to an Application:

type ClientMock = ReaderT Application IO

A slight problem here is that Request and Response come from

Servant, and they can't be passed to Application verbatim. If we

manage to convert between them and WAI's Request and Response, we

have achieved our goal: we can generate client functions for a

Servant-defined API, combine them into RunClient-polymorphic actions,

and run then either against a server or against a mock-Server like this:

main :: IO ()

main = do

-- We can run 'action' in 'ClientM' and talk to a server over the network.

putStrLn "Network calls:"

forkIO $ run 25000 $ serve (Proxy @Api) mockServer

manager <- newManager defaultManagerSettings

let env = mkClientEnv manager (BaseUrl Http "0.0.0.0" 25000 "")

runClientM action env

-- Or we run 'action' in 'ClientMock' against the mock server directly!

putStrLn "No network calls:"

runReaderT action (serve (Proxy @Api) mockServer)

Translating requests

Let's look at Servant's Request versus WAI's Request.

Servant:

data RequestF a = Request

{ requestPath :: a

, requestQueryString :: Seq QueryItem

, requestBody :: Maybe (RequestBody, MediaType)

, requestAccept :: Seq MediaType

, requestHeaders :: Seq Header

, requestHttpVersion :: HttpVersion

, requestMethod :: Method

}

type Request = RequestF Builder -- Data.ByteString.Builder

WAI:

data Request = Request

{ requestMethod :: Method

, httpVersion :: HttpVersion

, rawPathInfo :: ByteString

, rawQueryString :: ByteString

, requestHeaders :: RequestHeaders

, isSecure :: Bool

, remoteHost :: SockAddr

, pathInfo :: [Text]

, queryString :: Query

, requestBody :: IO ByteString

, vault :: Vault

, requestBodyLength :: RequestBodyLength

, requestHeaderHost :: Maybe ByteString

, requestHeaderRange :: Maybe ByteString

, requestHeaderReferer :: Maybe ByteString

, requestHeaderUserAgent :: Maybe ByteString

}

Servant's type is quite a bit more high-level than we'd like. Luckily

servant-client provides a function (somewhere in its internals) to

convert that type into http-client's request type, and that one is

easier to deal with. In fact, the translation becomes boring enough that

I will link to it instead of including it here: toWaiRequest.

Translating responses

There are four kinds of responses the Application can give us:

data Response

= ResponseFile Status ResponseHeaders FilePath (Maybe FilePart)

| ResponseBuilder Status ResponseHeaders Builder

| ResponseStream Status ResponseHeaders StreamingBody

| ResponseRaw (IO ByteString -> (ByteString -> IO ()) -> IO ()) Response

For simplicity I will only handle ResponseBuilder as the most common

one (and the only one servant-server uses unless you enable

streaming, as can be checked via Codesearch).

Servant's response type is, on the other hand, rather pleasant:

data GenResponse a = Response

{ responseStatusCode :: Status

, responseHeaders :: Seq.Seq Header

, responseHttpVersion :: HttpVersion

, responseBody :: a

}

type Response = GenResponse LBS.ByteString

This time the implementation is short enough that I will provide it in text:

fromWaiResponse :: Wai.Response -> IO Response

fromWaiResponse (Wai.ResponseBuilder status headers builder) =

pure Response

{ responseStatusCode = status

, responseHeaders = fromList headers

, responseHttpVersion = HTTP.http11 -- always HTTP/1.1 for servant-client

, responseBody = Builder.toLazyByteString builder

}

Putting it all together

We still need our RunClient instance, and it's not exactly trivial. We

need to get back the Response, but the Application doesn't let us

return anything:

type Application = Request

-> (Response -> IO ResponseReceived)

-> IO ResponseReceived

We can solve this problem by sneakily putting the Response into an

MVar:

instance RunClient ClientMock where

runRequest :: Request -> ClientMock Response

runRequest request = ReaderT $ \app -> do

responseMVar <- newEmptyMVar @Response

let storeResponse :: Wai.Response -> IO Wai.ResponseReceived

storeResponse waiResponse = do

response <- fromWaiResponse waiResponse

putMVar responseMVar response

pure Wai.ResponseReceived

Wai.ResponseReceived <- app (toWaiRequest request) storeResponse

takeMVar responseMVar

throwServantError :: ServantError -> ClientMock a

throwServantError = lift . throwIO

(It's a bit tricky to understand why it looks like this instead of

simpler Request -> IO Response; reading a Reddit thread on this

topic might help. The short explanation is that if the Application

needs to open a resource in order to answer a request, it should also

make sure to close the resource after the response has been sent, even

if the sending operation fails. Thus the sending has to be done inside

whatever bracket-ing the Application does.)

Note 2019-02-03: this code, together with a usage example, lives at monadfix/examples. It has not yet been packaged into a library, partly because it doesn't yet work with streaming and doesn't remember cookies between requests. If you end up using it, feel free to drop a line.

Debugging requests done by servant-client

Client

Client

I have some servant-client code that is giving me a 404. How do I get

the URL it thinks it is hitting?

The error type itself doesn't include that info, and due to the wonders of Servant's auto-generation of everything I'm never constructing a URL I can manually print.

You can render the whole routing tree by using the layout

function.

There's also an implementation via Free that lets you look at the

request: see Servant.Client.Free and Servant

cookbook entry on the topic.

Of course, you can also just hit a web server on localhost and see the request.

Yet another option is modifying the Manager to print all requests

(probably the simplest one if you control the Manager):

newManager defaultManagerSettings

{ managerModifyResponse = \resp -> print resp $> resp }

Writing a simple Read instance with generics

I have a bunch of sum types, some with several dozen members, for which

I need to define Read instances that are simply lowercase versions of

their constructors. I haven't dug into generics yet, is there a quick

way to do this?

Here's an example with generics-eot (a library that

simplifies GHC.Generics by representing everything as Eithers

and tuples). Of course, you can also do it with “pure” GHC.Generics,

but I forget its API after each time I use it :/

Here's how it's going to work:

> data T = Foo | Bar | Qux deriving (Show, Generic)

> greadMaybe "bar" :: Maybe T

Just Bar

First, some extensions and imports:

{-# LANGUAGE ScopedTypeVariables #-}

{-# LANGUAGE TypeApplications #-}

{-# LANGUAGE FlexibleInstances #-}

{-# LANGUAGE FlexibleContexts #-}

{-# LANGUAGE MonoLocalBinds #-}

import Generics.Eot

import Data.Char

If you have a type data T = Foo | Bar | Qux, generics-eot will

represent its constructors like this:

Foo <-> Left ()

Bar <-> Right (Left ())

Qux <-> Right (Right (Left ()))

We can use that to generate a list of all possible values for a sum type, assuming that none of the constructors have any fields:

enumValues :: (HasEot a, EnumType (Eot a)) => [a]

enumValues = map fromEot enumEot -- 'fromEot' converts a

-- generic representation

-- into a value of 'a'

-- | A class for things with several zero-field constructors.

class EnumType eot where

-- | Get all EOT-representations of constructors of the type.

enumEot :: [eot]

instance EnumType Void where

enumEot = []

instance EnumType () where

enumEot = [()]

instance EnumType eot => EnumType (Either () eot) where

enumEot = Left () : map Right enumEot

We can also get all constructor names by using datatype, which

returns information about a type passed via Proxy. Unlike with

GHC.Generics, the metadata in generics-eot is separate from the

representation of the type, which often makes it easier to deal with.

enumNames :: HasEot a => Proxy a -> [String]

enumNames = map constructorName . constructors . datatype

Now we just need to construct a lookup table and we can write a generic

analog of readMaybe (you can put the table into a Map for some extra

performance):

greadMaybe

:: forall a. (HasEot a, EnumType (Eot a))

=> String -> Maybe a

greadMaybe = (`lookup` table)

where

table :: [(String, a)]

table = zip (map (map toLower) (enumNames (Proxy @a)))

enumValues

By the way, generics-eot is pretty fragile and I had spent like ten

minutes fighting with this error:

Couldn't match type ‘GHC.Generics.Rep a’

with ‘GHC.Generics.M1 GHC.Generics.D c0 f0’

arising from a use of ‘datatype’

The type variables ‘c0’, ‘f0’ are ambiguous

I recommend putting code that uses generics-eot into a separate

module, enabling -XMonoLocalBinds there, and optionally moving stuff

into top-level functions instead of where clauses (otherwise it often

just doesn't compile for some reason).

That looks fairly succinct. But in terms of performance my options are either explicitly writing the pattern match for O(1) or using a once-generated map for O(log n)?

GHC does not optimize string pattern matches. You only get jump tables

(or binary trees) with integers and things that can be converted to

integers, e.g. Chars. Everything else is going to be a linear

comparison. A map (or a trie) will actualy be faster than

pattern-matching, starting from certain amount of constructors.

I recommend godbolt.org for inspecting generated assembly, they somehow match it up with Haskell source code and it's cool.

Do you have any resources for how the same would be written with

GHC.Generics? Or generics-sop, or whatever is the go-to

library of choice when you need to smooth some roughness with generic

programming.

You don't need to trouble yourself writing these, I was just wanting to

see some existing resources so I could reference them when I need to

solve a problem. I used the TypeShape library in F# for almost all my

boilerplate needs and I imagine that something similar in Haskell exists

as well.

The generics-sop solution is going to be pretty similar to the

generics-eot one, it's just that instead of Left and Right you'll

have Z and S. As for existing resources:

-

Read the paper by the authors of

generics-sop: “True Sums of Products”, 2014. -

Steal code extensively from their sample packages (

lens-sop, etc).

Update 2018-03-24: Andres Löh provided a

generics-sopsolution on Reddit: https://gist.github.com/kosmikus/76ab73d92fce76091ff7b2401f271649.

“What's your take on the dependently-typed languages landscape?”

Client

Client

So I have a question: what’s your take on the dependently-typed languages landscape?

The context is that we are planning to write some code using a more formal language than Haskell in order to provide stronger correction guarantees and we are not 100% settled on which language/prover to go with.

I have played with Idris, and know a bit of Coq. Then there is Agda, Lean, F*...

I have used Agda, Idris, and recently tried Coq.

My impression of Agda: compiles to inefficient code, doesn't have basic features like Haskell's deriving, no introspection of types (Typeable), no parametric quantifier (they model forall with pi).

My impression of Idris: no type classes (their “interfaces” don't imply coherence), bad type inference, a horribly buggy compiler (I usually got stuck on a bug within a few hours of development, made three attempts to use Idris).

Can't say anything of substance about Coq yet. I've seen a paper about using it to generate statically verified Haskell, this might be interesting to you.

Client

Client

Yeah, I have read the paper too.

So yes, Idris is not really ready for prime time, although it goes a long way towards making DT more amenable to normal programmers like me. Coq has a “large” community, is well supported, with a lot of libraries and tooling AFAICT so it seems a safe choice. I am curious about the situation with Agda.

The answer to “which language to choose” highly depends on whether you're going to actually run code written in a DT language or just want to prove properties of some model.

Client

Client

I don’t plan to run code (yet).

Agda is almost unusable on the run-a-program side – it doesn't have a mature proof-automation machinery. Agda is really good at modelling, meaning you can write a very powerful and convenient eDSL.

I think the most exciting option is -XDependentHaskell (when it's done) if you want to run code. I've read Richard's thesis, a lot of design decisions I liked. Of course, you seem to want doing these proofs sooner than later.

Since you don't want to run code yet, I'd say pick Coq. Not for its technical merits, but because it's the most mature. Proving False in Coq was a big deal, and they patched it up quickly, but in other DT languages it's a regular occurrence because they are experimenting with new things, most of them are research quality. And of course there are tactics for proof automation, and libraries with these tactics, it's a huge deal if you aim to prove anything bigger than toy examples for papers.

Coq has an Agda-like fashion with the equations (or something like this) library. And a lot of powerful tools like Ssreflect which can simplify complicated proofs by several magnitudes. Lean is in the same camp, but is less mature.

Client

Client

This is superficial of course, but I find Coq hard to read... Might be that my Caml skills are lacking.

It is very true that Coq is hard to read. “Writing proofs in Coq is like doing brain surgery over a telephone.”

First time I tried to learn Coq I found its syntax alienating (that was a few years ago). But then I tried it a few weeks ago and found it really pleasant, so it's very subjective. You'll get used to it.

Client

Client

OK, so this kinda confirms that Coq might be a good way to go.

For proving, yes. For modelling Agda is top. I modelled observational type theory and cubical type theory with it, I doubt this can be done in other languages in a usable way.

“Can I use recursion-schemes with bound?”

I have a question regarding recursion schemes and higher-order functors.

I have an AST that, as it turns out, needs more that a couple recursive operations defined on it (substitution, evaluation, partial evaluation, etc). I thought maybe I could save myself some trouble and write them with some recursion-schemes. I've learned that my AST is type-indexed and I'll need a higher-order functor (following the post “Fixing GADTs”), but I don't immediately see a way to define the hfmap for constructors that contain functions.

Is there an alternate encoding of Let and Acc below that would allow me to define instances for HFunctor? If not, is there a concise way to deal with something like recursion-schemes for manipulating such an AST? The main requirement is that I be able to fuse multiple composite operations into single traversals.

data ExpF (c :: Symbol) :: (* -> *) -> * -> * where

LitB :: Bool -> ExpF c r Bool

LitI :: Int -> ExpF c r Int

LitD :: Double -> ExpF c r Double

LitC :: Dense c -> ExpF c r (Dense c)

LitO :: Obs a -> ExpF c r a

Let :: r a -> (r a -> r b) -> ExpF c r b

UFn :: T.Text -> (a -> b) -> r a -> ExpF c r b

BFn :: T.Text -> (a -> b -> bb) -> r a -> r b -> ExpF c r bb

Acc :: (r a -> r b) -> Int -> r a -> ExpF c r b

Variable :: T.Text -> a -> ExpF c r a

You are venturing into a territory where I don't have answers prepared, unfortunately. Let's see. If your goal is to define type-indexed ASTs in a way that allows you to have a unified approach to defining operations, the first thing to realize is that it's an open research problem. One good paper I've seen on the subject is “Type-and-Scope Safe Programs and Their Proofs”, published in 2017.

Regarding using recursion schemes for this purpose, a higher-order functor indeed sounds like something that could work here. I'll report back with my thoughts when I read the blog post you linked.

Okay, I've read the post. That's indeed a reasonable approach, matches what I had in mind when I've heard “higher-order functor” :)

I see that the reason you have functions in your AST is that you're using HOAS. I don't think it's compatible with recursion schemes, at least I haven't seen it anywhere. And you lose other desirable properties with this representation (such as cheap alpha-equivalence checking). Did you consider other strategies to represent binding? Edward Kmett covers them on School of Haskell, with pros and cons.

Oi, another problem that isn't going to be solved overnight, then. I guess I can either find a different encoding of lets and lambdas or I can retool everything to work with bound. I've asked on Reddit if anyone knows about examples of the former. If there's no good solution I suppose I'll have to go with bound.

bound as a library won't play well with recursion schemes either, but you can reuse the general approach.

But I'd say the benefits that bound brings outweigh those of recursion-schemes, if you find yourself choosing.

Yes, I'm sure there are additional benefits. But I understand recursion-schemes (for the most part) already, so there's that... We'll see if anyone gives me an answer.

Can I fuse multiple operations in a single traversal with bound?

That's not something the library provides, no. But I don't think that bound precludes you from writing code in the style of recursion schemes and from getting fusion. Your data type parameter will be used for binding rather than subexpressions, but this doesn't preclude you from having another parameter.

You won't get to reuse existing combinators from recursion-schemes, though.

I don't mind the combinators, they seem to be straight forward to ad-hoc once you get a *Functor on the datatype. I've read that recursion-schemes are difficult to work with in bound because of the polymorphism, maybe it's not so bad.

I also have to consider the fact that I'm going to need to be able to store some sort of state with regards to this AST. With PHOAS, the only way I can see, is to persist all the events that affect the tree separately, and then re-evaluate the AST when we need to figure out what the new current state is (I guess basically replace all lambda terms with data-base positions so that they can be restored). It would be easier to just “dump it all” to a bytestring as one could conceivably do in bound though former approach of event-sourcing with PHOAS has the ability to “replay” the system should something get erroneously computed. I'm not sure which in the end is the stronger case.

What language to learn for dependent types?

Do you guys know any Idris? I'm thinking of reading the Idris book just to get a better grasp of dependent types, and then learn how to use those techniques in Haskell.

I expect I'll need some help at least with that second part, if not the first also.

I recommend Agda if your only goal is to learn about dependent types – it's closer to Haskell, and the compiler is more mature (less bugs). And I know it better than Idris.

That said, I've heard that the Idris book is a good one. So it'll work as well. The basics are the same everywhere, once you grasp pi/sigma types it'll be an easy ride.

Oh, wow. I didn't realize Agda was close to Haskell. Idris looks almost identical.

Idris is very different because of strict evaluation, while Agda is lazy and basically is Haskell on steroids (better type system).

Agda has a neat Haskell FFI, because its main backend compiles to Haskell – a good portion of the generated code is unsafeCoerce, simply because Agda has verified the code already and asks GHC not to bother.

Are there good learning resources?

That's the tricky part. No beginner-friendly ones, unfortunately. So Idris still makes sense.

I managed to learn Agda using the first chapter of the HoTT book and Péter Diviánszky's Agda tutorial. It's terse but good.

Agda also has a well-maintained wiki with a list of tutorials.

Dependent maps with uniques or type reps as keys

Haven't used this package in particular, but I've made a similar thing myself in the past. Let's backtrack a little so I can explain what's happening there.

When you have a heterogeneous collection, the problem you're facing is that you need to know what type of thing you're getting out of it on lookup. In a heterogeneous map (such as DMap), assume you inserted 1 :: Int at key k1 and "Hello" :: String at key k2. Then you do lookup k1 m. What type of value does it return? For this particular map, it's Int, but we know that because we've just inserted it, we keep track of it mentally. The compiler doesn't, unless we encode it in the types somehow.

The keys to a heterogeneous map should somehow determine the types of the values, so when we perform a lookup, we can know what result type to expect. For example, a key could be a Type.Reflection.TypeRep (added in GHC 8.2), then the key itself is a representation of value type, and naturally it determines the result type we get on lookup. Alternatively, we can have a singleton GADT where the constructor determines the type index. But there are cases when we want to generate keys on the fly (something like a singleton GADT with an infinite amount of constructors, if that makes any sense). Then we can use Integers as keys, where each key comes from a supply of unique values. When we generate a new unique key, we assign a type to it, so we can say “Let's generate a new key for a String that I want to store”, and if we do that in IO, we can get something like Tag (Uniq 12414123) :: Tag RealWorld String. From now on we know that whatever is stored at key 12414123 must be of type String, and we know that when we do a lookup as well.

So imagine you have a global GADT like this:

data Tags a where

T1 :: Tags Integer

T2 :: Tags String

T3 :: Tags ...

-- ...

Then getUniq adds a new constructor to this GADT, and returns it. You must not lose it, because you won't get access to it again.

Ok, then it functions as I thought. In terms of losing the keys, however, I can't see much utility in keeping opaque keys around. If I have keys x, y, z, and a DMap, which values do those keys refer to in the map? How do I keep track of it?

I also thought, but did not confirm, that if I added to a DMap with

the following:

tmp :: PrimMonad m => m (DMap (Tag (PrimState m)) (ValidDatum f))

tmp = do

x <- newTag

y <- newTag

pure $

DM.fromList [ x :=> MkValidDatum (Age 24)

, y :=> MkValidDatum (Width 33) ]

and I wanted to access Age at some point down the line, then I could initialize a different DMap with a tag that witnesses the same type and then use that to pull out the original value:

find (Rec dm _) = do

mx <- newTag

let n = DM.fromList [mx :=> MkValidDatum (Width 0)]

Just mv = DM.lookup mx dm

mv

You can't get anything out of the map that you return from tmp, because you've lost the keys. Creating new keys won't let you access the values that are there, even if types match. If you want a type-indexed map, you can use TypeRep for keys.

So, I can use TypeRep as a key for a DMap?

Yes, why not? But you'll have only a single value of each type.

I expect only a single value of each type as the collection is like an “open” record. As to “why not”, I just wanted to confirm that we were still taking about DMaps. I'll give that a go later today.

[...] I've looked at the Reflection module, it looks like I'm going to have to define GCompare TypeRep instances to make TypeRep a valid key. Will try to get to this tomorrow. I also came across type-map which looks to be exactly what I need.

Defining GCompare for TypeRep should be easy, I will create a PR to dependent-map this evening. Here you go: PR #17.

Stack reruns passed tests even with --no-rerun-tests

Client

Client

It looks like Stack does not properly track what tests have passed? It has option --no-rerun-tests that should remember when the tests have passed and avoid rerunning them during stack test, but for me it always reruns the tests, even with no code changes and the previous run having them pass.

Is that a bug or is there something more I need to be doing?

I've investigated and it turns out that:

-

It is a bug in Stack.

-

--no-rerun-testsis broken completely, it can't ever work with Stack 1.6.1, on any project. It's been broken since Nov 4, 2015. The Stack team has noticed only now, apparently. -

There is a workaround: when tests succeed, find a file called

stack-test-successin your Stack directory and change the last byte from 0 to 1 :) Stack should be doing it by itself when tests pass but it doesn't. -

I've asked Michael Sloan what would be the best way to fix the bug. (It's going to be a one-line change, the only question is “what is the best place to put that line”.) I'll ping you when my PR is accepted and the bug is fixed in HEAD.

Update: I've fixed the bug and the next release of Stack (1.6.4 probably) will include the fix. You can also use the stable branch of Stack if you want to.

Client

Client

Very cool! Looking forward to that release then. Thanks a bunch.

2017

What does “injective” mean in the context of type families?

Can you explain what is meant by “injective”? Sometimes I get type errors surrounding that word. I know it has something to do with type families.

Injectivity means that we can determine the input to a function from its output. For example, \b -> if b then 1 else 0 is an injective function, because we can look at its output (1 or 0) and deduce the corresponding input.

Formally, you would say that a function f is injective when f a == f b implies a == b.

Since you are seeing this in the context of type families, this is probably what you are getting from GHC:

NB: `F' is a type function, and may not be injective

In this case GHC is just trying to help you understand why it cannot deduce the necessary type equalities.

The typechecker relies on two facts about type constructors:

-

they are injective:

T a ~ T bimpliesa ~ b -

they are generative:

T a ~ D bimpliesT ~ D

These properties hold for data, data family, newtype, class. They do not necessarily hold for a type synonym or a type family.

When you have a type function with a signature like this:

f :: Num a => Maybe a -> Bool

the compiler needs to know a to find the Num instance for it. When you pass an argument of type Maybe Int to it, GHC uses injectivity of Maybe to conclude that a is Int, and finds an instance for it, and all is well.

But assume the input was a type family:

type family F a where

F Int = Maybe Int

F Char = Maybe Int

f :: Num a => F a -> Bool

Now you pass Maybe Int to f, but GHC cannot deduce that a is Int, because F a ~ Maybe Int for both Int and Char – the F type family is not injective.

Since GHC 8.0.1 you can annotate your type families as injective (see “Injective type families” in the GHC manual). For example:

type family Maybe' a = r | r -> a where

Maybe' () = Bool

Maybe' a = Maybe a

Of course, if the type family is not actually injective, GHC will report a type error.

By the way, you can notice that the syntax of injectivity annotations is modelled after the syntax of functional dependencies. This is no coincidence, and the extension is actually called -XTypeFamilyDependencies. However, it is an immature extension: you cannot have more than one dependency for a single type family, or a dependency that involves several type variables. In addition, there are issues with type families (presented the talk “Constrained Type Families” from ICFP 2017) that prevent GHC from using the injectivity annotation in certain cases.

Wow! That's very clear and helpful! Thank you very much.

I've also looked at GHC sources to understand in what circumstances exactly GHC emits this hint about injectivity. Here's the relevant code:

tyfun_msg | Just tc1 <- mb_fun1

, Just tc2 <- mb_fun2

, tc1 == tc2

, not (isInjectiveTyCon tc1 Nominal)

= text "NB:" <+> quotes (ppr tc1)

<+> text "is a non-injective type family"

| otherwise = empty

and the nearby comment is:

-- (b) warning about injectivity if both sides are the same

-- type function application F a ~ F b

-- See Note [Non-injective type functions]

So, you will be getting this hint whenever GHC tries to unify F a with F b, knowing that F is not injective. The actual error might be something else, this is more of a reminder. Again, from the source:

{-

Note [Non-injective type functions]

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

It's very confusing to get a message like

Couldn't match expected type `Depend s'

against inferred type `Depend s1'

so mkTyFunInfoMsg adds:

NB: `Depend' is type function, and hence may not

be injective

-}

When would one use arrows?

Arrows.

I know nothing about them.

Why would you use them?

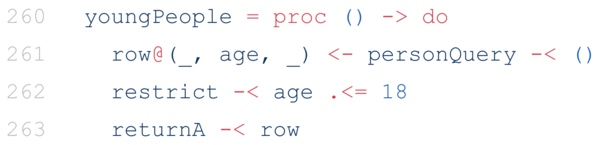

There aren't too many use cases for them. They are used in FRP (see netwire and wires), to form SQL queries (opaleye), but I must admit I don't understand exactly how they are used there (except I know that the author of opaleye mentioned once that if he had to rewrite it from scratch, he wouldn't use arrows).

Update 2018-03-05: the author of

opaleyechimes in on Reddit and says that he didn't quite say that; “There's no getting away from the fact that, in the denotation Opaleye uses, SQL queries are arrows and not monads [...] If I write the tutorial again I might deemphasize arrows. Since Opaleye was written GHC has gainedApplicativeDowhich allows one to write a lot of queries without arrows at all.”

An example of proc-notation in code that uses opaleye

The main advantage you get from arrows is the proc notation, which I haven't really used either. And the proc notation implementation is hardly maintained anyway, Simon talked about it on the mailing list, no one understands how it works in GHC nowadays, especially its interactions with -XRebindableSyntax.

Basically, when you design an API, your type might turn out to be an arrow, and then you get proc-notation, but other than that I wouldn't bother. A sign that your type might be an arrow is that it is a profunctor that supports (->) a b -> p a b.

If you want to understand monads, generally the progression is to first understand Functor and Applicative, because they are morally the superclasses of Monad (even though in the standard library it wasn't the case for a long time). Similarly, to understand arrows you must first understand profunctors, then profunctor composition, and then strong profunctors.

The point is that arrows are a very specific point in the design space. Even profunctors are a rare occasion in code, not to mention strong monoidal profunctors (aka arrows).

I mean, if they weren't in base, they would probably be an obscure library on Hackage that 2.5 people knew about. I tried to learn them a few years ago, I found the original paper (“Generalising Monads to Arrows”) quite approachable, but since then I have seen exactly zero use cases for arrows in my code, so now I don't even remember the specifics. People import Control.Arrow to get tuple combinators :)

And Ed. Kmett refers to them as a “historical accident”.

Thank you for the historical context! I think I'll read through Category Theory for Programmers then, instead of studying arrows.

Machine-readable logs with distinct ADTs for log messages

Client

Client

All my log statements are represented as ADTs. I have many log data types scattered through the code; if I had only one type to capture all log statements, I would have a dependency sink and every time I dot an ‘i’ in log I would have to recompile entire application.

I log in JSON so all those ADTs implement ToJSON and FromJSON. I also have a separate application which takes the logs and converts them back to ADTs, and I have Pretty instances for all those ADTs – to print log statements in human-readable form.

The problem is dispatch. When I read a JSON object I have a string which identifies the type, but my dispatch basically looks like a very long list of:

"LogScheduleTx" -> render (parse logEntry :: Either Err LogScheduleTx)

Is there a better way to do this dispatch?

Okay, how do you envision this tool would figure out which types to try to parse from logs?

-

Some explicit specification, like

type LogTypes = '[LogX, LogY, LogZ]? This list could be at top level, where you don't need to recompile. -

Figure out the types from the scope, i.e. the logic could be “any type with a

IsLogMessageinstance”?

Client

Client

I can have any. I am not sure how the typeclass solution would work but indeed perhaps a type list would do the trick.

Okay, so here's how it could work. First, define a class:

class LogTag t where

logTag :: Proxy t -> Text

All log types would define instances for it:

instance LogTag LogInfoX where

logTag _ = "X"

This is needed because we want to associate a string to each type. This string will appear in the generated JSON to identify the type. Any module that defines a type for logging would depend on this class, but it's unlikely to change, so this would not cause recompilation.

For logging messages, you'd have a helper like this:

logObject :: LogTag t => t -> Aeson.Value

It would construct a JSON object with two fields:

{ tag: "x", message: ... }

and we'd know that any log message has a tag that we can read. If I understand correctly, this is pretty similar to what you have now: you already have a tag that you match on.

The last bit is to define FromJSON and Pretty in a generic way for the type-level list of the messages. You could do it with a union of types in the style of union. Ideally we'd want the following API:

data LogUnion :: [*] -> * -- a sum type for all log types

type LogTypes = '[LogX, LogY, LogZ]

parseLogTypes :: Aeson.Value -> LogUnion LogTypes

prettyLogTypes :: LogUnion LogTypes -> Doc AnsiStyle

If this sounds right to you, I can describe how to implement

parseLogTypes and prettyLogTypes.

Client

Client

This all makes sense. Indeed I have the first part done (I did it with Typeable).

A trouble with Typeable is that the type rep can change between compiler versions, while a string is stable.

Now to the solution. First we need LogUnion, which is trivial to define:

data LogUnion ts where

LThis :: !t -> LogUnion (t ': ts)

LThat :: !(LogUnion ts) -> LogUnion (t ': ts)

Then we'd want to implement FromJSON and Pretty for it. This would require two instances of these classes: the base case and induction.

The base case is an empty list of types. parseJSON would always throw a parsing error (because an empty union is the same as Void, and there are no values to construct). pretty does no job either, because it receives an empty union (which is similar to Void), and this cannot happen at runtime. Thus:

instance FromJSON (LogUnion '[]) where

parseJSON _ = fail "not a valid log message"

instance Pretty '[] where

pretty = \case {} -- see the EmptyCase extension

And the interesting work would be happening in the inductive case:

instance (LogTag t, FromJSON t, FromJSON (LogUnion ts)) =>

FromJSON (LogUnion (t ': ts))

where

parseJSON j = withObject "LogObject" $ \o -> do

tag <- o .: "tag"

if tag == logTag (Proxy @t)

then do

message <- o .: "message"

return $ This message

else

That <$> parseJSON j

Here we check the string tag (or type rep, if you go with the Typeable solution). In case it matches the head of the list, we try to parse the message of this type t. Otherwise we delegate the work to the instance for the rest of the list. Eventually either the tag would match, or we'd get to the base case and fail.

A similar thing for pretty:

instance (Pretty t, Pretty (LogUnion ts)) =>

Pretty (LogUnion (t ': ts))

where

pretty (This t) = pretty t

pretty (That t) = pretty t

“Converting GADTs to JSON without boilerplate?”

Client

Client

We want to log the effects. Basically every time I evaluate an effect I would like to issue a log statement before and just after. It could be done almost without any effort, but our effects are represented with a GADT, and I cannot easly convert it to JSON. Basically for now I have to write things like:

data DBAction a where

AddRequest :: RequestId -> DBAction ()

GetValue :: RequestId -> DBAction (Either Value Value)

PersistEvent :: Event -> DBAction ()

-- more constructors omitted

data LogDBAction

= LogDBActionAddRequest RequestId

| LogDBActionGetValue RequestId

| LogDBActionPersistEvent Event

-- ...

deriving (Generic)

instance ToJSON LogDBAction

instance FromJSON LogDBAction

toStartLog :: DBAction c -> LogDBAction

toStartLog (AddRequest r) = LogDBActionAddRequest r

toStartLog (GetValue r) = LogDBActionGetValue r

toStartLog (PersistEvent e) = LogDBActionPersistEvent e

-- ...

This all is like super redundant information. I have to do it 'cause I don’t see other way to convert this DBAction a to and from JSON.

This is a common problem when dealing with GADTs.

You usually have several representations, which have progressively more info at type level:

-

String– you only know that you have a sequence of characters -

Aeson.Value– you know that these characters represent a valid JSON document -

LogDBAction– you know that this JSON represents a DB action -

DBAction– you know that this DB action has expected input/output types

There should be – in theory – a general way to convert between all these representation providing only minimal info. For example, we can convert between LogDBAction and Aeson.Value using Generic, and between Aeson.Value and String using functions that aeson provides.

The issue is the last gap between representations, LogDBAction and DBAction. Ideally, it should be possible to derive LogDBAction from DBAction, together with conversion functions. In practice, everyone's doing this by hand.

The latest research on this topic that I've seen was a prototype tool that automates this process, I recommend reading their paper for more info (it's fairly well written and understandable without too much background): “Ghostbuster: A Tool for Simplifying and Converting GADTs”. But I am not aware of a tool on Hackage that would do this transformation.

I sent an email to one of the authors of the Ghostbuster paper, asked him if the tool is still in development. When he answers, I will let you know.

Client

Client

Cheers, that’s very informative. Thank you – I will read the paper.

Meanwhile, I have received a reply. The author told me that he and another member of their research team had left Indiana University and the fate of the tool is uncertain.

Don't be afraid of -XUndecidableInstances

[...] My issue is that I had to turn on -XUndecidableInstances to get all the necessary instance constraints to typecheck [...]

Before reading the code, I would like to comment on -XUndecidableInstances. The extension is entirely benign, and is not an indication of a wrong approach. You can treat instance search as a function (you give it a type, it gives you an instance). For example, when GHC tries to fulfill an Ord a constraint and it knows that a ~ Int, this is like calling findMeOrdInst :: a -> Ord a with Int as an argument (hopefully, the analogy makes sense). In this model, each instance is a function clause.

GHC really wants this function findMeOrdInst to terminate, because this search is happening at type level, and we risk non-termination in the type-checker, and a hanging compiler would be a rather unpleasant experience. Of course, due to the halting problem, a perfect termination check cannot exist, so GHC uses a couple of heuristics, which are documented in the GHC manual (“Instance Termination Rules”). When the heuristics reject correct code, we disable them with -XUndecidableInstances.

The worst thing that can happen is that the compiler will hang, but in practice it won't, because there's a limit to the amount of iterations it performs doing instance search, so you'll see an error about too many iterations during instance search. So, basically, -XUndecidableInstances is a small regression to error messages in exchange for more expressive power, which is a good trade-off.

For some reason people try to avoid -XUndecidableInstances, probably because the word “undecidable” sounds bad, or because they've learned that -XIncoherentInstances is bad (which is true) and start confusing the two.

A writeup on digestive-functors

Client

Client

I've tried using digestive-functors for creating forms in my Spock application, but I feel lost.

[The following is a copy of a gist I wrote and sent. It turned out to be longer than I expected.]

In my opinion, digestive-functors is needlessly complicated and obscure (like many Haskell libraries are). It took me three hours to understand how to work with it. However, until things like forma get more popular, that's what we have to work with, I guess. (Oh, and forma is kinda obscure too, though a bit less.)

Writing your own formlets library

I believe that the best way to understand something is to write your own version of it, and with digestive-functors it is seemingly the only way to understand it. Loading the examples into GHCi shouldn't be necessary, just read the explanations.

We want to be able to write forms like this:

userForm = User

<$> "name" .: text Nothing

<*> "mail" .: check "Not an email address" checkEmail (text Nothing)

And then we want to be able to do two vastly different things with them:

-

Ignore all logic in the form and just render its structure.

-

“Run” the form against a list of (field, value) pairs and produce a

Useras the result.

And now, a spoiler: whenever you want to be able to run something and to inspect the structure, it's often going to look like something from the Free-land. In our case, we can simply create a data type with special constructors for pure and <*>, and that will give us a way to represent all form definitions in an inspectable way.

Here's a simplified version of Form from digestive-functors:

{-# LANGUAGE GADTs #-}

import Data.Text (Text)

type Name = Text

data Form a where

Pure :: a -> Form a

Ap :: Form (b -> a) -> Form b -> Form a

TextField :: Name -> Text -> Form Text

Checkbox :: Name -> Bool -> Form Bool

In case you are not familiar with the GADTs syntax, it's the same as:

data Form a

= Pure a

| Ap (Form (b -> a)) (Form b)

| TextField Name

| Checkbox Name

The difference is that the GADTs syntax lets us specify that the TextField constructor can only be used to construct a Form Text, not any arbitrary Form a.

The second two constructors (TextField and Checkbox) are easy – they let us create labelled inputs. (In digestive-functors they can also contain values, weirdly. It doesn't make sense to me, because when you're describing a form it doesn't make sense to also fill it – the values are supposed to be provided by the user. I have spent some time thinking about it and I don't see a good reason for this design, so in my toy realization I'm going to omit this detail.)

The first two constructors are used for sticking together the components of the form. In the next section we'll see how it's done.

Creating a form

We construct forms with <$> and <*>, so our Form must have an Applicative instance and a Functor instance. The Pure and Ap constructors are exactly what we need to write it:

instance Functor Form where

fmap f = Ap (Pure f)

instance Applicative Form where

pure = Pure

(<*>) = Ap

Now we can construct simple forms that become trees of Aps:

registerForm =

User <$> TextField "first_name" ""

<*> TextField "last_name" ""

<*> TextField "email" ""

-- is the same as

registerForm =

Ap (Ap (Ap User (TextField "first_name" ""))

(TextField "last_name" ""))

(TextField "email" "")

Thanks to currying, a chain of any length becomes a nested Ap application. If some elements were complex forms instead of single fields, it would've still been a tree of Aps, though the structure would've been a bit more complex.

Rendering a form

A tree like that is very easy to render – just walk through it and render all fields/checkboxes. I'm going to use printf for simplicity, though in real life you should use lucid or something similar for such tasks.

renderForm :: Form a -> String

renderForm f = "<form>" ++ go f ++ "</form>"

where

go (Pure a) = ""

go (Ap a b) = go a ++ go b

go (TextField name) =

printf "<input type=\"text\" name=%s><br>" (show name)

go (Checkbox name) =

printf "<input type="checkbox" name=%s><br>" (show name)

Evaluating a form

If you have key–value associations from a POST query, you can also easily evaluate a form:

evalForm :: Map Name Text -> Form a -> a

evalForm kv = go

where

go (Pure a) = a

go (Ap a b) = (go a) $ (go b)

go (TextField name) =

fromMaybe "" (M.lookup name kv)

go (Checkbox name) =

M.lookup name kv == Just "on"

For simplicity I'm not going to add any kind of error handling, validation, etc. We could easily add validation by introducing another constructor:

data Form a where

...

Validate :: (b -> Either Text a) -> Form b -> Form a

This is similar to what digestive-functors does.

Using digestive-functors

Now that you (maybe) understand the idea behind digestive-functors, let's see how to use the actual library. There are three main types:

-

type Form v m a– a form that can be rendered or evaluated.-

vis the type for rendered things – e.g.Text,String,Html, or your own custom type. It's used for validation errors, for combobox items, etc -

mis the monad in which validation happens – can beIdentityif all your validation is pure, can be somethingIO-like if you want to e.g. access a DB during validation) -

ais the type that will be returned during form evaluation – in many cases it's going to be something that can be trivially constructed from the fields contained in the form

-

-

type Formlet v m a = Maybe a -> Form v m a– simply a function which can be passedNothingif you want to get a form, orJustif you want to convert a value into form fields (i.e. do the opposite thing to evaluating a form). It is most commonly used to set default values for form fields. As far as I understand, you're not really supposed to createFormlets of your own. Basic primitives provided bydigestive-functorsare all eitherFormlets or something close in shape to them. -

View vis a form that you are supposed to render after thePOSTquery if something went wrong. It consists of aForm v m a, user's input from the previous time they tried to submit the form, and validation errors (that should be displayed to the user when you re-render the form).

Here are some examples of simple forms (which can be combined with <$> and <*>, and have names attached to them with .:):

textInput = text Nothing

intInput = stringRead "Expected an int" Nothing

-- pears shall be the default because I like them more

fruitInput = choice [(1, "Apple"), (2, "Pear")] (Just 2)

If you simply want to apply a function to form's output, you can use

<$>:

cutInput = take 10 <$> text Nothing

You can also use check/checkM to do validation, and validate/validateM to do transformations that can fail.

Once you have a form, you need to convert it into a View and then render it. digestive-functors doesn't do any rendering for you, but it provides two helper functions in Text.Digestive.View:

-

getFormconverts a form into aViewwithout any input or error message -

postFormaccepts a form and a function for getting form fields from the request (of typePath -> m [FormInput]), fills the form, and gives you aViewwith input and error messages and the result value of the form (if it was evaluated successfully)

You are supposed to render the View by yourself – the official tutorial provides an adequate example of doing that. Also, you don't actually have to use getForm and postForm – the Spock-digestive package by the author of Spock abstracts that away. Here's an example:

loginAction :: SpockAction conn Session st ()

loginAction = do

let formView = F.renderForm loginFormSpec

f <- F.runForm "loginForm" loginForm

case f of

(view, Nothing) ->

site $ formView view

(view, Just loginReq) ->

if lrUser loginReq == "admin" &&

lrPassword loginReq == "123" -- hardcoded, yeah

then do

sessionRegenerateId

writeSession (Just $ lrUser loginReq)

redirect "/member-area"

else site $ do

H.alertBox H.BootAlertDanger "Login failed. Try again."

formView view

runForm automatically checks whether it was a GET or POST request (and parses the request and fills the form if it was a POST).

Dependencies installed by Stack aren't being cached

Client

Client

I'll start off with a fiddly build question. We've been using CircleCI, and are trying to sort out caching of the Stack build state. However despite what looks like a correct caching and restore of .stack-work, we're not seeing any actual caching.

On a build with no source changes we get:

foo-1.0.0: unregistering (missing dependencies: foo-util)

foo-lib-1.0.0: unregistering (missing dependencies: foo-test, foo-util)

foo-test-1.0.0: unregistering (missing dependencies: foo-util)

foo-util-1.0.0: unregistering (local file changes: util/Foo/Orange.hs

util/Foo/Pear.hs util/Foo/Apple.hs util/Foo/Banana.hs util/...)

I'm not sure if we're doing something silly and missing something to cache, or if cache save/restore doesn't actually work yet, or if stack is doing something odd.

Can you send your circle.yml config?

Client

Client

Sure, here it is:

version: 2

jobs:

build_and_test: # NB. some parts omitted

steps:

- checkout

- restore_cache: ... # omitted

- run:

name: stack_build

command: stack build --test

- deploy: ... # omitted

- save_cache:

key: dependency-cache-{{ .Branch }}-{{arch}}-{{ .Revision }}

paths: ".stack-work"

Okay, so just to make sure: there's no problem with snapshot deps (from .stack), but caching/restoring of .stack-work doesn't work?

Client

Client

It looks like it partially works. extra-deps are cached fine but it always rebuilds our code from scratch.

Oh, I figured it out: apparently Stack creates .stack-work folders in subprojects as well, so you have to cache them too:

- save_cache:

paths:

- .stack-work

- lib/.stack-work

- util/.stack-work

- test/.stack-work

Also, I asked authors of Stack and apparently currently it's not possible to ask Stack to consolidate .stack-works into one directory, so if you decide to look for a way to do that you should probably redecide :P

Client

Client

Final update: got everything working! Still running tests even without compiling, but drops our noop builds from 13m to 1m.

Does GHC apply functor laws during optimization?

[...] Totally different question, out of curiosity: does GHC apply functor laws in optimization?

Haha, that's one way to solve it.

The previous time I had this question, I didn't find anything :P

Yeah, apparently no Functor-related rules. Of course, GHC could have hard-wired Functor/Monad-related optimizations, but I remember asking someone knowledgeable about that and I got told there was no such thing built-in.

I wonder how much faster Haskell code would be if it were safe to apply such optimizations.

There was a Trac ticket about it. There's a good discussion in the comments.

The issue is that there are unlawful Functor instances out there and optimizations shouldn't break them.

Ahhh, they can add the rules themselves.

That really is a good solution. That puts the onus on library authors to prove correctness before benefiting but doesn't punish code for breaking the laws.

But I wonder why the rules are not written for the functors in base – maybe the rules are in terms of the monomorphic functions instead of fmap?

There are rules for monomorphic functions, such as map, but you don't get these rules applied in a polymorphic setting.

Not even if map got inlined in place of fmap?

That's not polymorphic anymore.

When does GHC inline?

When does GHC inline? I just wrote a tiny function that is merely for convenience to convert things. It really could / should be inlined. Do I need to tell GHC this?

If a function is small and non-recursive GHC will inline it.

Even across module boundaries?

Yes, it puts an optimized version of the function into interface files (.hi) and inlines across modules.

If you add an {-# INLINE #-} pragma it will put an unoptimized version of the function into the .hi file, so you'd want to avoid specifying this pragma unless you need to inline the function body verbatim and optimize at each use site (as opposed to optimizing at definition site).

Ahh, yes that makes great sense, I can see how some cases would optimize much better at call site instead.

But even functions optimized at definition site can still be inlined at call site, correct?

Yes, if they are small and non-recursive.

It also puts a toll on compile times if you use INLINE too much, because optimizing at use site means optimizing multiple times.

Speeding up Template Haskell in GHCJS

[...] I'm using GHCJS and TH makes it go amazingly slow. [...]

Update 2018-03-07: apparently, this has been fixed in GHCJS at some point in the past and now the trick described below doesn't result in any speedup at all (e.g. it brings no speedup on ghcjs-0.2.0.9006020_ghc-7.10.3, which is fairly old already).

Thanks to Niklas Hambüchen for pointing this out!

There's a trick to speed up TH in GHCJS: make it go in one pass. Instead of:

data Foo = ...

makeLenses ''Foo

data Bar = ...

makeLenses ''Bar

try putting everything into one splice:

data Foo = ...

data Bar = ...

do

decs1 <- makeLenses ''Foo

decs2 <- makeLenses ''Bar

return $ decs1 ++ decs2

Or with sequenceA:

concat <$> traverse makeLenses [ ''Foo, ''Bar ]

This is faster because GHCJS starts a Node.js process for each splice. A single splice requires less overhead.

Wow that is an amazing trick! You may have just cut down my build times by a large factor!

Dealing with records in Haskell

How do you guys deal with records? I really like lenses, but I don't really like Template Haskell and makeLenses. I really like generic-lens, but the syntax is so verbose:

record ^. field @"fieldname"

And then I still have the problem with record field names colliding.

Also, I'd love to just get rid of the record field accessors that get created for records. But then I can't construct a record because lenses don't let you construct new records from nothing. I've tried deriving Default... but that's not ideal and in some cases just downright bad. I can imagine a version of generic-lens's field function that allows you to de-mangle the record field name in some way.

You can go about it like this:

declareFields [d|

data Person = Person {

_personName :: Text,

_personAge :: Int }

|]

This gives you lenses called name and age. Names can be duplicated (the lenses are polymorphic), and the actual generated type is not a record so accessors like _personName don't get generated.

If all fields in such a structure are lazy (i.e. without the bangs), you can construct a record with lenses like this (I wouldn't recommend this approach though because if you forget one field the compiler won't warn you):

bob :: Person

bob = undefined

& name .~ "Bob"

& age .~ 24

To be honest, in my experience constructing records from scratch doesn't happen that often so I don't see “you have to use _personName once in a while” as a problem and just use makeFields.

If you have a sensible default or some smart constructor then yeah.

Well, if you have a sensible default it's even better but this doesn't seem that bad either:

return Person

{ _personName = ...

, _personAge = ...

}

It's only bad when Person is AppDbContextHandle and you have _appDbContextHandleName.

Ah, in this case just go the “abbreviate all the things” route :) We do it at work:

data AppDbContextHandle = AppDbContextHandle

{ _adchFoo = ...

, _adchBar = ...

}

Though if you hate it I could write a Template Haskell helper for you that lets you write things like this:

construct [|

let field1 = ...

field2 = ...

in AppDbContextHandle

|]

I'm using beam which doesn't support makeFields so I have to use makeLenses.

The solution with the helper would indeed be nice. However I'm also using GHCJS and TH makes it go amazingly slow. On the other hand, that's not a huge deal because I rarely build the app in GHCJS. So many constraints...

Which is why I like generic-lens so much. No TH (so no slowness) and it obviously works fine with beam since it's just deriving from Generic. But I'd need to use -XOverloadedRecordFields or something.

By the way, there's another solution that uses new -XOverloadedLabels: overloaded-records. You define a record as usual, and then call overloadedRecord:

import Data.Default (Default(def))

import Data.OverloadedRecords.TH (overloadedRecord)

newtype Bar a = Bar { _bar :: a }

overloadedRecord def ''Bar

Then you'll be able to use overloaded labels as lenses for Bar's fields without even having to import anything:

{-# LANGUAGE OverloadedLabels #-}

import Control.Lens ((+~))

add :: Int -> Bar Int -> Bar Int

add n = #bar +~ n

(I suspect there are some caveats though.)

Possibly performance. generic-lens has no performance penalty, but I'm not sure about this library. As far as I know generic-lens has gone through a lot of effort to ensure that it does not impose runtime overhead. Take a look at generic-lens's use of inspection-testing. They “prove” that GHC generates the same lenses that you'd write by hand.

This sounds cool. Although I think they couldn't prove that GHC manages to do that in every case, especially if optimizations kick in a different order (i.e. the lens is inlined first, then its part moved around, etc). inspection-testing checks the generated Core, but of course it can only check what you pass to it, and GHC might generate different Core in different circumstances.

I think I'm in love with the overloaded labels approach to generic-lens described in this tweet. It uses generic-lens-labels. With -XDuplicateRecordFields you can allow the ambiguity which only matters in record updates anyway. But if the only time you use that is to create a new record, then there's no ambiguity.

The only issue I have with overloaded labels is that different libraries have different use cases for them, and those may conflict.

For instance, haskell-gi uses them for FFI fields. Does it conflict with use of overloaded labels as lenses?

Interesting. Yeah, I don't know. I use records so heavily that I'd be willing to sacrifice pretty much every other use though. ;)

Update 2018-03-06: it wasn't mentioned in the original dialogue, but look at the

-XDuplicateRecordFieldsextension which got introduced in GHC 8.0. It, too, makes working with records easier in Haskell.

“Where to start with generics?”

Hi! I would like to do some things with generics but have no idea where to start.

I think the best starting point for understanding generics is understanding the algebra of types. Check out this article series: The Algebra of Algebraic Data Types.

If you're comfortable with thinking about all types as sums and products, you already understand the bulk of generic programming.

Awesome! I'll start there. I find records particularly annoying to work with and things like generic-lens give me hope, but that and similar tools are still a bit too inflexible so I hope to customize them a bit.

If you're particularly curious about generics, I would also recommend reading Andres Löh's lecture notes on modern generics.

You don't really need it if you just want to use generics but if you've already heard about a bunch of concepts (GADTs, type-indexed lists, etc) without being especially familiar with them, it makes for nice bedtime reading and tricks you into understanding the generics-sop library along the way.

Haskell integration with editors

Client

Client

Do any non-Vim/Emacs editors have good Haskell integration?

I would go as far as to say that there's no good editor integration for Haskell at all. There's ghc-mod and the ecosystem around it, but it stops being usable as soon as the project is big enough. It's just too slow. And lots of Haskell tooling suffers from the issue that there's no good package with the Haskell AST (there's haskell-src-exts, but it's just not what the compiler uses, and the discrepancies are often noticeable). Another story is integration with build systems... Honestly, at one point I gave up and decided not to use Haskell tooling, it's broken.

@alanz is doing an immense amount of work to fix that.

NB: I heard from a coworker that Sublime can be set up to work as well as Emacs; if you consider Emacs's integration good, I could ask him for details about his Sublime setup if you're interested.

Update 2018-03-05: the coworker adds that Atom's integration is better than Sublime's, but also that Atom is too slow :/ Also take a look at this chart of Haskell support in various IDEs and editors.

There were discussions in the GHC mailing list about defining an external AST for GHC which could be used by various tooling. They also plan to apply the principles from the “Trees That Grow” paper, which is an amazing idea. All of it is happening right now, and Haskell will get the tooling it deserves sooner or later (but, sadly, "later" sounds more realistic).

Anyway, I'd still recommend hasktags to generate tags. Although it doesn't work very good either (sometimes it misses some definitions, sometimes it indexes non-definitions), but it works 90% of the time and “Jump to definition” is just something that gives an immense productivity boost (even if a bit broken).

One important caveat is that you can't jump to definitions generated by Template Haskell. That's quite obvious in retrospect, because hasktags doesn't run the compile-time code, but might be a bit disappointing when you can't jump to a lens generated by makeLenses or something like that.

And if you use Stack as your build tool, just having stack build --file-watch in a terminal window next to the editor is often sufficient.

Most of the people who worked on tooling such as haskell-mode, ghc-mod, and ide-backend, decided to consolidate their efforts and work on haskell-ide-engine. What's exciting about this is that they plan to support Language Server Protocol. Basically, this means that we'll get Haskell support in all editors that support LSP, so it's going to be N+M rather than N×M.

Client

Client

Ok cool, I’ll try out hasktags tomorrow. I’ll also try haskell-ide-engine with VS Code tomorrow, looks like that’s working?

Things are working up until they break, which might be when (1) the project is too big and the tooling gets painfully slow to use, (2) you need an obscure language extension that wreaks havoc on the tooling.

Especially if it's an extension implemented in the latest compiler version and it's a syntactic addition. When -XLambdaCase was introduced, everything haskell-src-exts-based just stopped working – that's just one example.

Briefly about monad transformers

Client

Client

I've got a kinda generic question. How do monad transformers work?

Well, the reason to use monad transformers is to get the Monad instances automatically. For example, there's no runtime difference between a -> IO b and ReaderT a IO b, but the latter has a Monad instance, whereas the former doesn't. Actually, that's it.

Of course, we could use a function instead:

state -> env -> IO (Either exc (a, state))

But we want a (>>=) that does all the plumbing, and to get this we express the same thing as a transformers stack:

StateT state (ReaderT env (ExceptT exc IO)) a

Client

Client

Ok, that makes sense.

I’ll read that chapter of @bitemyapp’s book on that and ask you if I have any specific questions.

And we couldn't define a Monad instance for a -> IO b because it's unclear whether we want it to behave as ReaderT a IO b or Reader a (IO b). So the structure of transformers layers tells the compiler how deep we want (>>=) to inspect the structure of the types.

(Well, actually, a -> IO b has a Monad instance and it's indeed equivalent to Reader a (IO b) rather than ReaderT a IO b.)

Briefly on Servant vs Yesod

The primary philosophical difference is that Servant tries to do everything using type-level tricks, while Yesod uses Template Haskell a lot. The code looks very different with these frameworks because of that. What's common between them is that they're both wai-based, meaning they can run atop any wai-compatible server (warp or warp-tls), and that's great. I recommend against frameworks that aren't wai-based (this includes Snap and Happstack) because warp is a great web server, it's performant and configurable. Now, I'm not going to give a point-by-point comparison for Servant and Yesod because they're just so different that having such a list might be misleading. For instance, they both support type-safe routing, but it's done in wildly different ways.

I'd say it's useful to know both. If you implement a web API that talks to the front-end using JSON, I recommend Servant. If you want a multi-page web-app that has user authorization and stuff like this, Yesod is the path of least resistance. And since both of them are wai-based (did I mention it's great? well, here's another reason why) you can embed a Servant website as a subsite for Yesod, and vice versa, you can run a Yesod website as part of a Servant API.

Client

Client

I have more experience with Yesod (am a contributor, read a lot of the code) but I’m finding that I’m just ripping out most of it – so I’ll give Servant a try.

I’m a little worried it looks too complex to introduce to beginners.

It definitely is very complex on the inside, if you try to look at the definitions they're monstrous – but I'd claim that using it isn't hard at all. Those definitions are so complex precisely to allow for elegant code that uses them, they try to hide a lot of complexity.

Of course, it doesn't help that Haskell doesn't have good facilities for type-level programming. In a language where functions are promoted and there's proper relevant quantification (might be getting a bit technical here), Servant could be implemented much nicer.

Fixing a memory leak in conduit code

What exactly prevents this Conduit code from running in constant memory?

Roman has filed a PR with a writeup here: https://github.com/reactormonk/non-constant-memory/pull/1. I'm not sure whether it's enough for your purposes or not, so please reply to him if you want a deeper investigation.

[The following is a copy of the writeup linked above.]

My preferred way to profile Haskell programs looks like:

stack build --profile

stack exec -- json-unify-exe \

+RTS -sstderr -N -K1K -A4M -n1M -qb0 -xt -hy -p

hp2ps -e8in json-unify-exe.hp

Running the original version of code results in:

ARR_WORDS is stuff related to ByteString. So a lot of ByteStrings are loaded into memory. The culprit is foldChunks (I cannot say right now what exactly the problem with foldChunks is, it's just my intuition said to me it looks suspicious; I'll think about it), so if we remove the following code (note also that runResourceT is completely redundant here)

allJson <- runResourceT $ runCConduit $ sourceTBMChan trees

=$=& foldChunks 100

=$=& foldChunks 10

=$=& foldChunks 10

=$=& foldChunks 10

=$=& foldlC (\x y -> force $ mappend x y) mempty

Prelude.print $ someStuff $ unfix $ force allJson

and write this instead:

allJson <- runCConduit $ sourceTBMChan trees

=$=& foldlC (\x y -> force $ mappend x y) mempty

Prelude.print $ someStuff $ unfix $ force allJson

we'll get:

Which is the actual leak. But it's a simple one: you just allocate a lot of [] things, i.e. lists. Namely, x and y in \x y -> force $ mappend x y are of the same type: Fix TestType. The Monoid instance for this type is:

instance Monoid (Fix TestType) where

mappend a b = Fix (unfix a `mappend` unfix b)

mempty = Fix mempty

which in the mappend case uses the Monoid instance of TestType:

data TestType a = TestType

{ inner :: [a]

, someStuff :: Int

} | Done

instance Monoid (TestType a) where

mappend (TestType i1 d1) (TestType i2 d2) =

TestType (mappend i1 i2) (d1 + d2)

mappend e Done = e

mappend Done e = e

mempty = TestType [] 0

which itself in the mappend case uses the Monoid instance of []. I.e. valueToTypes generates some lists inside Fix TestType and then you append (and fully force each time!) these lists over and over again just to discard the resulting value via someStuff $ unfix $ ... later. The now obvious solution is to discard lists immediately while folding:

stuff <- runCConduit $ sourceTBMChan trees

=$=& foldlC (\x y -> x + someStuff (unfix y)) 0

Prelude.print stuff

which results in a constant-memory profile:

By the way, I cannot review your conduit code as I'm not very familiar with conduit, but one thing that looks overcomplicated is:

allInDir :: MonadResource m => Conduit FilePath m (Fix TestType)

allInDir = ...

readFile :: MonadResource m => FilePath -> m B.ByteString

readFile f = do

(key, h) <- allocate (openBinaryFile f ReadMode) hClose

res <- liftIO $ B.hGet h 20000000

release key

pure res

What readFile does is allocates a handle, reads the file and releases the handle. I.e. strictly reads a file. But everything related to ResourceT it does just inside this single function, so you could as well write:

readFile :: FilePath -> IO B.ByteString

readFile f = runResourceT $ do